Avalon Dataset

20 games played by 30 users across 19 unique teams, with over 24 hours of playtime. The data include the full game chat, game state, player beliefs, and persuasion and deception strategies.

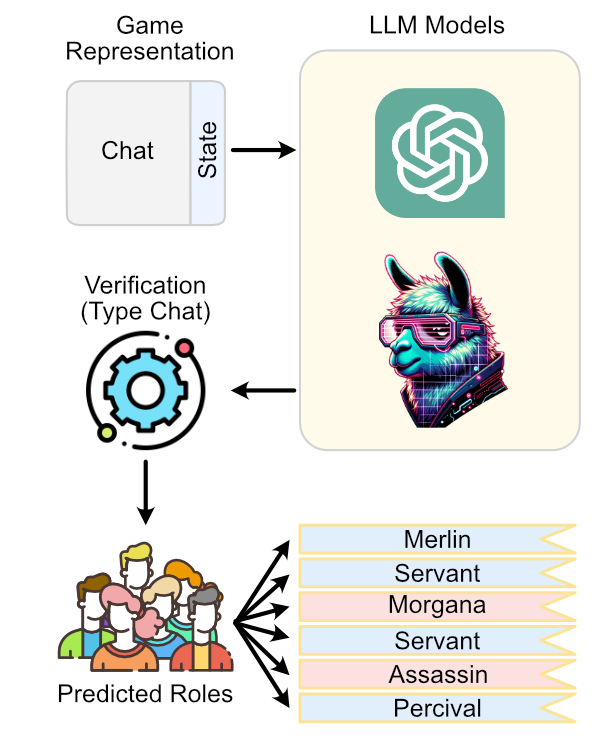

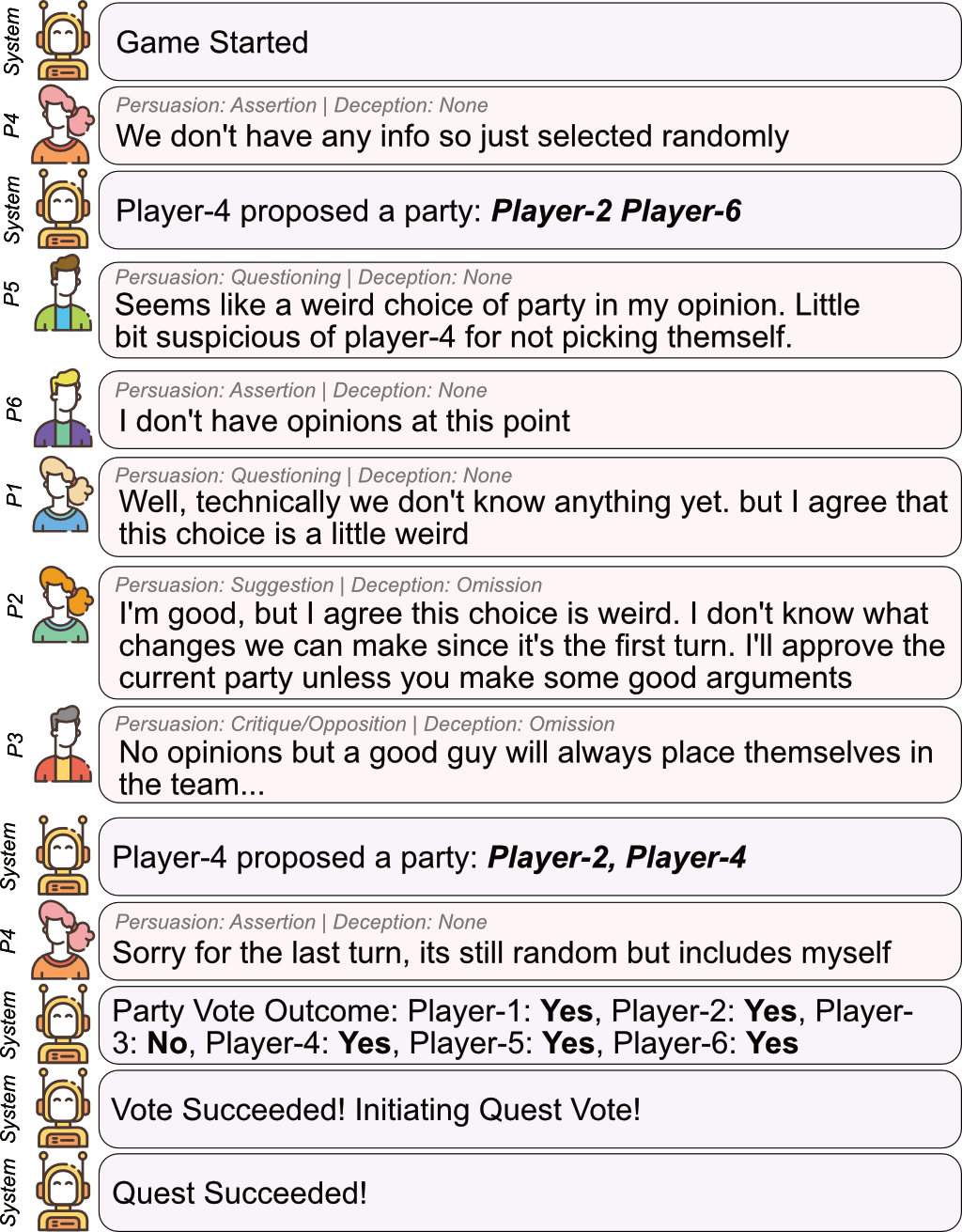

Deception and persuasion play a critical role in long-horizon dialogues between multiple parties, especially when the interests, goals, and motivations of the participants are not aligned. Such settings pose challenges for current Large Language Models (LLMs), as deception and persuasion can easily mislead them, particularly in long-horizon multi-party dialogues. To this end, we explore the game of Avalon: The Resistance, a social deduction game in which players must determine each other's hidden identities to complete their team's objective. We introduce an online testbed and a dataset containing 20 carefully collected and labeled games among human players that exhibit long-horizon deception in a cooperative-competitive setting. We examine how well LLMs can use deceptive long-horizon conversations between six human players to determine each player's goal and motivation. In particular, we discuss the multimodal integration of the chat between the players and the game state that grounds the conversation, providing further insight into the true player identities. We find that even current state-of-the-art LLMs do not reach human performance, making our dataset a compelling benchmark for investigating the decision-making and language-processing capabilities of LLMs.

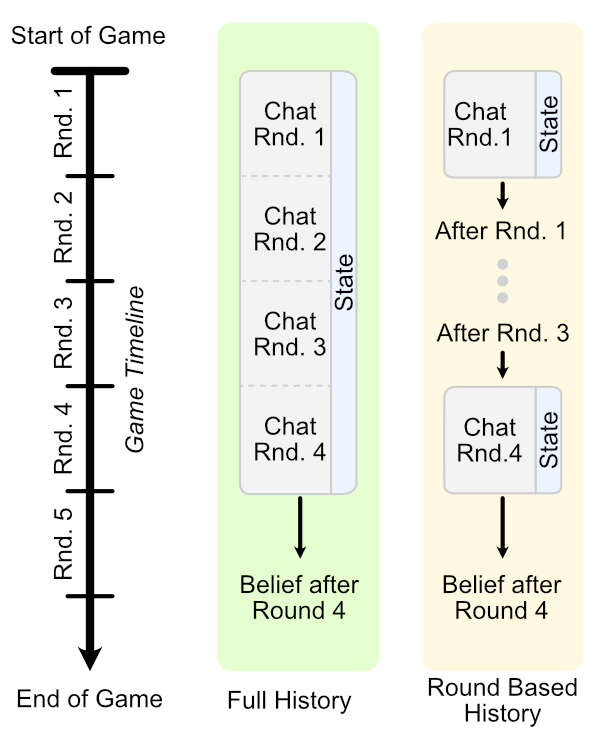

The game's context (i.e., chat and game state) is represented either cumulatively from the beginning of the game (green) or in a round-based manner (yellow), which relies on a belief about player roles carried over from earlier rounds.
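The two context representations can be illustrated with a short Python sketch. It is a minimal illustration under assumed data structures: `Round`, `full_context`, `round_context`, `run_round_based`, and the `predict` callback (a stand-in for an LLM call) are hypothetical names, not part of the released dataset or code.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Round:
    """One game round (hypothetical format): chat messages plus a text summary of the game state."""
    chat: list[str]
    state: str


def full_context(rounds: list[Round], upto: int) -> str:
    """Beginning-of-game representation: all chat and state from the first round through round `upto` (0-based)."""
    parts: list[str] = []
    for i, r in enumerate(rounds[: upto + 1]):
        parts.append(f"[Round {i + 1}] state: {r.state}")
        parts.extend(r.chat)
    return "\n".join(parts)


def round_context(rounds: list[Round], upto: int, belief: str) -> str:
    """Round-based representation: only round `upto`, prefixed by the belief carried over from earlier rounds."""
    r = rounds[upto]
    parts = [f"Previous belief: {belief}", f"[Round {upto + 1}] state: {r.state}"]
    parts.extend(r.chat)
    return "\n".join(parts)


def run_round_based(rounds: list[Round], predict: Callable[[str], str], belief: str = "unknown") -> str:
    """Feed each round's prediction forward as the belief for the next round; return the final belief."""
    for i in range(len(rounds)):
        belief = predict(round_context(rounds, i, belief))
    return belief
```

In this sketch, the round-based variant keeps each prompt roughly the size of a single round, but depends on the carried-over belief to summarize everything earlier; the beginning-of-game variant sees all prior chat and state, at the cost of a context that grows with every round.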

We evaluate GPT-4, GPT-3.5, and Llama-2 (including fine-tuned versions of GPT-3.5 and Llama-2) on predicting each player's role and find that these models struggle with such complex natural language understanding (NLU) tasks.
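Scoring role predictions can be sketched as exact-match accuracy over players, with a per-role breakdown. This is an illustrative assumption, not the paper's evaluation protocol: `evaluate` and the dictionary format (player name to role name) are hypothetical, and the roles in the example follow a common 6-player Avalon setup (four good, two evil) rather than anything read from the dataset files.

```python
from collections import defaultdict
from typing import Iterable

# Hypothetical format: each game maps player name -> role name.
Assignment = dict[str, str]


def evaluate(games: Iterable[tuple[Assignment, Assignment]]) -> tuple[float, dict[str, float]]:
    """Return overall exact-match role accuracy and per-role accuracy over (prediction, truth) pairs."""
    total = correct = 0
    role_total: dict[str, int] = defaultdict(int)
    role_correct: dict[str, int] = defaultdict(int)
    for pred, truth in games:
        for player, role in truth.items():
            hit = pred.get(player) == role  # a missing prediction counts as wrong
            total += 1
            correct += hit
            role_total[role] += 1
            role_correct[role] += hit
    overall = correct / total if total else 0.0
    per_role = {role: role_correct[role] / role_total[role] for role in role_total}
    return overall, per_role


if __name__ == "__main__":
    # Toy 6-player game in which the prediction swaps the two evil roles.
    truth = {"p1": "Merlin", "p2": "Percival", "p3": "Servant",
             "p4": "Servant", "p5": "Morgana", "p6": "Assassin"}
    pred = dict(truth, p5="Assassin", p6="Morgana")
    overall, per_role = evaluate([(pred, truth)])
    print(f"overall={overall:.3f}", per_role)
```

Exact matching is the strictest choice in this sketch; a per-role breakdown makes it visible when a model confuses specific roles (here, the two evil roles) even though its overall score looks moderate.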

6-minute video presentation of our work.

@inproceedings{stepputtis-etal-2023-long,
  title = "Long-Horizon Dialogue Understanding for Role Identification in the Game of Avalon with Large Language Models",
  author = "Stepputtis, Simon and
    Campbell, Joseph and
    Xie, Yaqi and
    Qi, Zhengyang and
    Zhang, Wenxin and
    Wang, Ruiyi and
    Rangreji, Sanketh and
    Lewis, Charles and
    Sycara, Katia",
  editor = "Bouamor, Houda and
    Pino, Juan and
    Bali, Kalika",
  booktitle = "Findings of the Association for Computational Linguistics: EMNLP 2023",
  month = dec,
  year = "2023",
  address = "Singapore",
  publisher = "Association for Computational Linguistics",
  url = "https://aclanthology.org/2023.findings-emnlp.748",
  doi = "10.18653/v1/2023.findings-emnlp.748",
  pages = "11193--11208",
}